Datamoshing is a super fun effect to build, and it keeps finding new creative uses in both real-time and rendered media. In part #1 of this series, we covered the high-level implementation approach. Now it’s time to dive into TouchDesigner and start building our datamoshing component!

Read part #1

It’s been a few weeks since part #1, so if you haven’t read it yet, I’d highly recommend going through it first. If you have, it’s still worth a refresher, because we won’t be diving too deeply into the concepts again here. You can read part one below:

And before we dive in, a big shoutout to Ompu Co for their great blog post, which has been the reference for this series:

Project setup

The first thing we’ll do is reference our overall architecture diagram from the last post:

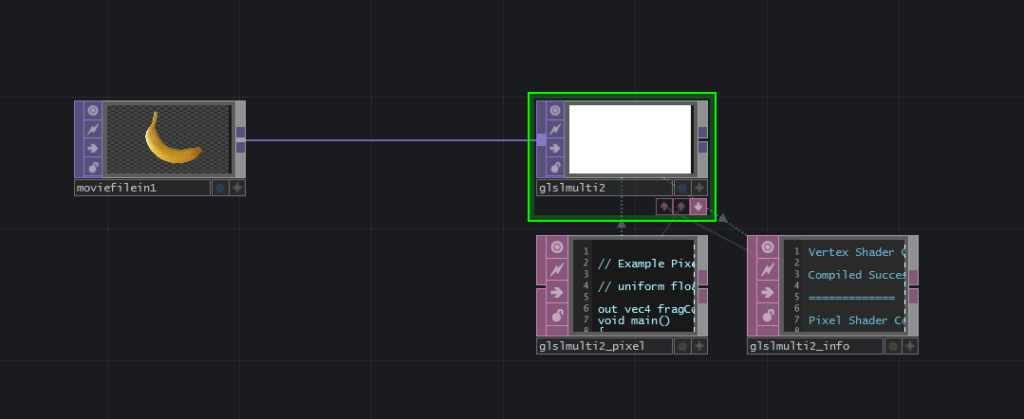

Based on this, we can actually sketch out most of the network before even worrying about any coding or parameter fiddling. We’ll start by adding a Movie File In TOP at the beginning of the network and plugging that into a GLSL Multi TOP:

Next we can go into the Palette (you can open it with Alt + L) and get the opticalFlow tool into our network and wire it up as our diagram shows. You’ll find it under the Tools section of the Palette:

The final setup element we need here is to create a feedback loop from the output of the GLSL Multi back into the 3rd input. We can do this with a Feedback TOP that is referencing the GLSL Multi TOP:

This is one of the great benefits of creating our flow chart from earlier. It really helps you get building quickly.
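
If the feedback wiring feels abstract, here is a minimal plain-Python sketch of the data flow we just built. It is not TouchDesigner API code: `fake_motion`, `glsl_multi`, and `run` are hypothetical stand-ins for the operators, frames are just short lists of numbers, and I'm assuming the feedback starts out black on the first frame.

```python
# Plain-Python model of the network's data flow (NOT TouchDesigner API code).
# Every name here is a hypothetical stand-in for an operator in the network.

def fake_motion(current, previous):
    """Crude placeholder for the opticalFlow tool: a frame difference,
    which is NOT real optical flow, just something to pass along."""
    return [c - p for c, p in zip(current, previous)]

def glsl_multi(source, motion, feedback):
    """Stand-in for the GLSL Multi TOP and its three inputs.
    This placeholder ignores `motion` and averages the source with the
    previous output, just to make the feedback visible."""
    return [(s + f) / 2 for s, f in zip(source, feedback)]

def run(frames):
    previous_frame = frames[0]
    feedback = [0.0] * len(frames[0])  # assumption: feedback starts black
    outputs = []
    for frame in frames:
        motion = fake_motion(frame, previous_frame)
        out = glsl_multi(frame, motion, feedback)
        outputs.append(out)
        feedback = out          # the next frame reads this frame's output
        previous_frame = frame
    return outputs

print(run([[1.0, 1.0], [1.0, 1.0]]))
```

The key line is `feedback = out`: the shader never sees its own output from the current frame, only the one from the frame before, which is the role the Feedback TOP plays on the third input.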

Select your movie

Because datamoshing needs some movement in the image, the banana is unfortunately a bad test asset. The nature clips that ship with TouchDesigner could work, as they have slightly more motion, but in this case I’m going to use Count.mov since it has a lot of constant motion in it:

Talking through the setup code

This is where the magic happens. We’re going to take the Unity script and shader code from the original reference blog post and convert it for our GLSL Multi TOP. One of the important things here is patience, as we’re going to look through most of the lines of code even if we don’t fully understand them. Often the variable names, comments, and code structure give us a lot of hints about what is happening, and we can then connect those dots to their TouchDesigner equivalents.

The first code block we see in the blog article is one that isn’t a shader but instead is a script in Unity and it looks like this:

    using UnityEngine;

    [ExecuteInEditMode]
    [RequireComponent(typeof(Camera))]
    public class DatamoshEffect : MonoBehaviour {
        public Material DMmat; //datamosh material

        void Start () {
            this.GetComponent<Camera>().depthTextureMode=DepthTextureMode.MotionVectors;
            //generate the motion vector texture @ '_CameraMotionVectorsTexture'
        }

        private void OnRenderImage(RenderTexture src, RenderTexture dest)
        {
            Graphics.Blit(src,dest,DMmat);
        }
    }

Now, don’t be scared off by this. A lot of folks see this and think “I don’t even know this language, how could I port it over??” And this is where the magic of good variable names comes into play. We can read through the variables and get a good idea of what kind of data is passing through different parts of the code.

For example the first few lines look similar to our Python import statements, so likely not useful to port:

    using UnityEngine;

    [ExecuteInEditMode]
    [RequireComponent(typeof(Camera))]

The next few lines are basically setting up the object-oriented side of things, and we can see from reading the variable names that they’re creating a material for their datamoshing. We’ll be working in 2D, so we can even skip that:

    public class DatamoshEffect : MonoBehaviour {
        public Material DMmat; //datamosh material

The next set of lines is interesting for our reference:

    void Start () {
        this.GetComponent<Camera>().depthTextureMode=DepthTextureMode.MotionVectors;
        //generate the motion vector texture @ '_CameraMotionVectorsTexture'
    }

Here we can see something interesting. Although I have NO idea how to write this code, by reading the variable names and function names I can determine:

When this script starts, it gets the motion vectors from the depth textures

Great! Even with no coding experience in Unity, we can derive this important information. Now again, because we’re working in 2D, we don’t particularly have a depth map, BUT we can generate motion vectors with our opticalFlow. If optical flow is doing this and feeding into our shader, then no need to worry on the code side of things other than sampling that texture when we need it.

Shader conversion

Now we’re getting to the real potatoes! The shader goodness that does all of the magic of the effect. What you might not expect me to say is that this is closer in process to converting a Shadertoy shader than it is to writing a new shader. What I mean is that we’re going to talk through each of the shaders and which elements will get converted into their TouchDesigner equivalents. When I did this process myself, I did go through and build each one of the shaders step by step, so we’re going to follow the same process here and I’ll talk through each shader step.

First example – Using Motion Vectors

Here’s the first shader code block. As the author mentions, this mainly just takes the motion vectors and colours the screen based on them.

    sampler2D _MainTex;
    sampler2D _CameraMotionVectorsTexture;

    fixed4 frag (v2f i) : SV_Target
    {
        fixed4 col = tex2D(_MainTex, i.uv);
        float4 mot = tex2D(_CameraMotionVectorsTexture,i.uv);
        col+=mot;//add motion vector values to the current colors
        return col;
    }

So what’s going on here? Let’s bang through it. The first few lines define a main texture and the motion vectors. In Unity, you need to define these as sampler2Ds at the top of your shader. In TouchDesigner, since we have our textures wired up to the GLSL Multi TOP, we reference them inside the main() function of our shader with sTD2DInputs[].

Then we get into the definition of our function, which in this case is fixed4 frag(v2f i), which means it’s a function that returns a fixed4 value and takes an input argument named i. We’ll figure out what i is in the next line. In TouchDesigner, we will almost always have a generic void main() function with no arguments, and we’ll use vec4 data structures for our pixel data.

Then we get to the two lines that sample the incoming textures. One important thing we can notice here is that i.uv is being referenced in the places where we want the UV. In TouchDesigner, we can rely on the built-in variable called vUV to get our UV positions. We’ll also use the generic texture() function instead of the specific tex2D() function.

The last thing that happens here is that the motion vectors are added to the colour, and the result is output. The adding line works as-is in GLSL, so there’s nothing to change there. For the output, we know that in TouchDesigner we should feed our final colour into the TDOutputSwizzle() function to ensure compatibility on different GPUs and systems.

If I were to convert this whole shader to work in a GLSL Multi TOP, it would look like this:

    out vec4 fragColor;

    void main()
    {
        vec4 col = texture(sTD2DInputs[0], vUV.st);
        vec4 mot = texture(sTD2DInputs[1], vUV.st);
        col += mot;
        fragColor = TDOutputSwizzle(col);
    }

You can see the structure is essentially the same, except we’ve swapped in our TouchDesigner equivalents for the elements in the Unity shader.
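
If it helps to see the same logic outside of a shader, here is a rough Python sketch of what the GPU does with that code: run the same tiny function once for every pixel. The names `add_motion` and `shade` are hypothetical, the images are nested lists of RGBA tuples, and nothing here clamps values the way a fixed-point texture format might.

```python
def add_motion(col, mot):
    """Per-pixel equivalent of `col += mot` on RGBA tuples."""
    return tuple(c + m for c, m in zip(col, mot))

def shade(image, motion):
    """Apply add_motion to every pixel, the way the shader's main()
    runs once per output pixel. Both inputs must be the same size."""
    return [
        [add_motion(c, m) for c, m in zip(image_row, motion_row)]
        for image_row, motion_row in zip(image, motion)
    ]

# A 1x1 grey image, with motion stored in the red and green channels.
image = [[(0.25, 0.25, 0.25, 1.0)]]
motion = [[(0.5, -0.25, 0.0, 0.0)]]
print(shade(image, motion))
```

Notice that motion values can be negative, so adding them can darken a channel as well as brighten it, depending on the direction of movement.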

If you enter that into your GLSL Multi TOP and save it, you should see a similar result, where our motion vectors, which usually live in just the red and green channels of a texture, are added to our original image like so:

Great, so we’ve just finished our first example on the way to the real implementation of this datamoshing effect! In the next post we’ll dive through all the remaining examples and talk about special considerations we have in TouchDesigner.

Wrap up

Datamoshing is an exciting and fun technique to work with. While it is challenging to get set up in TouchDesigner, it’s valuable as both a technique and a learning exercise. The processes we’re going through here are the exact same workflows that the high-end pros use when they’re the first ones to implement fluids, physics, raytracing, path tracing, or any feature that isn’t in TouchDesigner. They find a reference for how things work in another language, step through it, and decipher what’s happening so they can port it over to TouchDesigner.